Description

The boom of virtual personal assistants (VPAs) in recent years has accelerated the integration of smart devices into our daily lives. Around these assistants, an ecosystem of service providers, third-party developers, and end-users has begun to take shape. Developers create applications and release them through application stores, from which users obtain them and run them on smart devices. Various applications, such as Amazon Alexa skills and Google Assistant Actions, have been developed to provide a wide range of functionality, from playing music and making phone calls to controlling smart home devices.

Although VPA service providers require developers to provide documents that disclose their apps' data handling practices, the verbal user interaction of VPAs may still put users' privacy at risk. If VPA apps do not honestly declare their data handling practices in their privacy policy documents, or if they collect user data beyond what their functionality needs, users' privacy is still violated. We therefore aim to assess and enhance the privacy of VPA apps.

VPA apps are accompanied by a privacy policy document that informs users of their data handling practices. These documents are usually too lengthy and complex for users to comprehend, and developers may intentionally or unintentionally fail to comply with them. In this work, we conduct the first systematic study of privacy policy compliance in VPA apps.

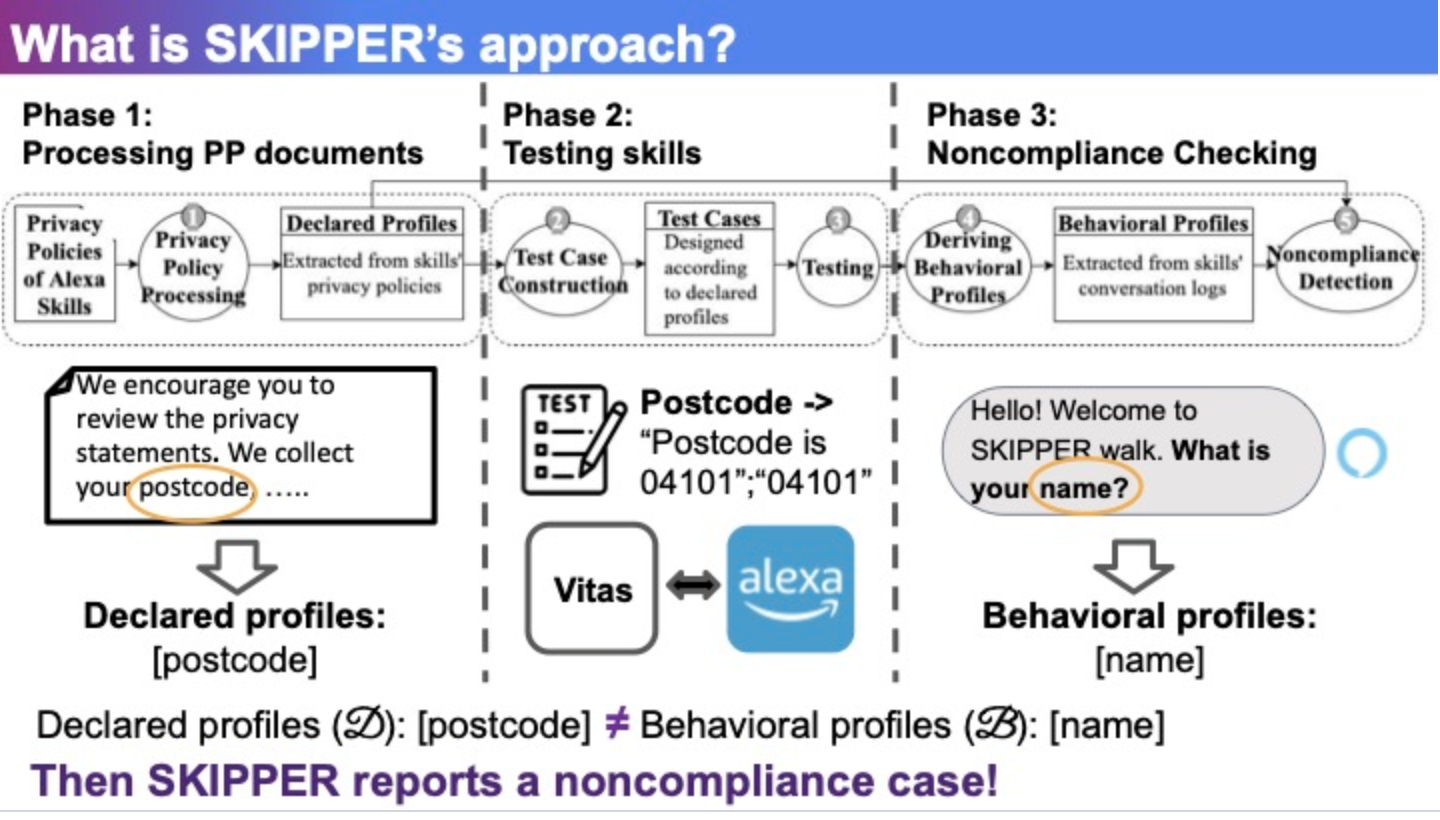

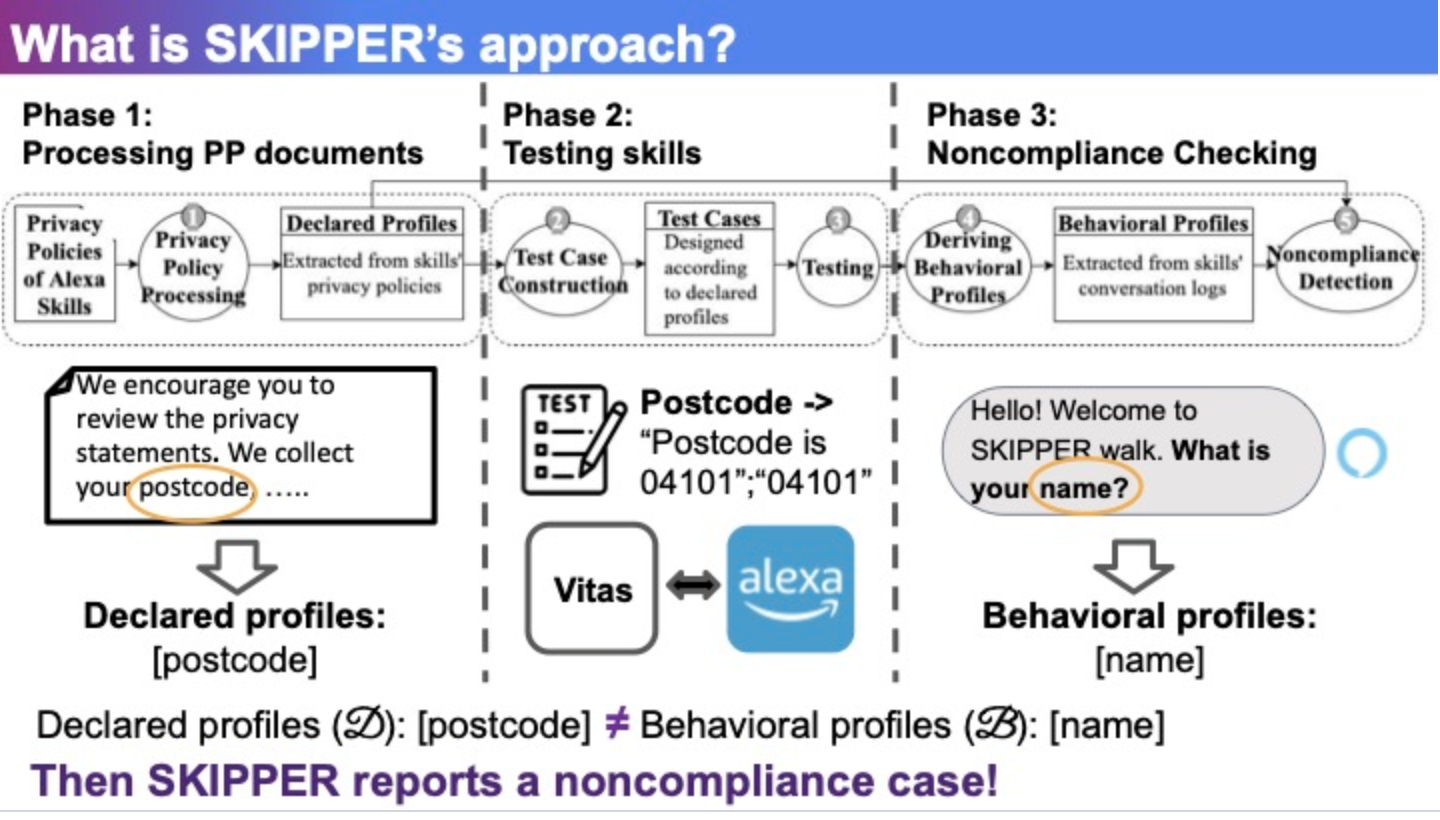

The core idea of project SKIPPER is to represent each skill by two profiles, a declared privacy profile (what the skill states it does) and a behavioral privacy profile (what the skill actually does), and then to check these two profiles for inconsistencies. Drawing on the literature on privacy policy studies and on the privacy policy documents of major developers such as Google and Amazon, we focus on four testable categories of policies that commonly appear in privacy policy documents:

- PP-1 (TYPES): policies on the types of data to collect

- PP-2 (CHILDREN): policies on child protection mechanisms

- PP-3 (REGIONS): policies on protection mechanisms for special regions

- PP-4 (RETENTION): policies on data retention periods
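To make the profile comparison concrete, here is a minimal sketch, assuming each profile has already been extracted into a simple record with one field per category (PP-1 to PP-4). The `PrivacyProfile` class, its fields, the `find_inconsistencies` function, and the one-rule-per-category checks are all hypothetical illustrations; how SKIPPER actually builds the two profiles and which checks it applies are not shown here.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PrivacyProfile:
    """Hypothetical profile with one field per policy category."""
    data_types: set[str] = field(default_factory=set)         # PP-1 (TYPES)
    child_protection: bool = False                            # PP-2 (CHILDREN)
    protected_regions: set[str] = field(default_factory=set)  # PP-3 (REGIONS)
    retention_days: Optional[int] = None                      # PP-4 (RETENTION); None = unspecified


def find_inconsistencies(declared: PrivacyProfile,
                         behavioral: PrivacyProfile) -> list[tuple[str, str]]:
    """Compare a declared profile with a behavioral one (illustrative rules only)."""
    issues = []
    # PP-1: data types observed in behavior but never declared
    undeclared = behavioral.data_types - declared.data_types
    if undeclared:
        issues.append(("PP-1", "undeclared data types: " + ", ".join(sorted(undeclared))))
    # PP-2: child protection claimed in the policy but not observed
    if declared.child_protection and not behavioral.child_protection:
        issues.append(("PP-2", "claimed child protection not observed"))
    # PP-3: special-region protections claimed but not observed
    missing = declared.protected_regions - behavioral.protected_regions
    if missing:
        issues.append(("PP-3", "claimed region protection not observed: " + ", ".join(sorted(missing))))
    # PP-4: data kept longer than the declared retention period
    if (declared.retention_days is not None and behavioral.retention_days is not None
            and behavioral.retention_days > declared.retention_days):
        issues.append(("PP-4", f"data retained {behavioral.retention_days} days, "
                               f"declared {declared.retention_days}"))
    return issues


# Hypothetical example: a skill that over-collects and keeps data too long.
declared = PrivacyProfile(data_types={"email"}, child_protection=True,
                          protected_regions={"EU"}, retention_days=30)
observed = PrivacyProfile(data_types={"email", "location"}, child_protection=False,
                          protected_regions={"EU"}, retention_days=90)
for category, detail in find_inconsistencies(declared, observed):
    print(category, detail)
# PP-1 undeclared data types: location
# PP-2 claimed child protection not observed
# PP-4 data retained 90 days, declared 30
```

Keeping one comparison rule per category mirrors the four-category split above; a fuller checker would likely need richer rules (for example, retention stated in free text rather than as a number of days).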

VPA apps may request access to the user's personal data to realize their functionality, raising concerns about user privacy. While considerable efforts have been made to scrutinize VPA apps' data collection behaviors against their declared privacy policies or requested permissions, it is often overlooked that most users ignore these elements at installation time. Dishonest developers can thus exploit this situation by declaring and requesting more data than they need, so that their actual data collection appears covered during compliance auditing.

In this work, we argue that an app's data collection must also be examined against its functionality, complementing existing research on the privacy compliance of VPA apps. We conduct a systematic analysis of the (in)consistency between the data an app's functionality needs and the data it actually requests. The core idea of Pico is to check for inconsistencies between the data a skill needs and the data it requests. Pico first clusters skills by their functionality-related documents, and then detects inconsistencies as outliers in the requested data within each skill cluster.
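The two-step idea (cluster by functionality, then flag unusual requests within a cluster) can be sketched as follows. This is a minimal illustration, not Pico's method: it swaps in Jaccard similarity over description words with greedy clustering, and treats a data type as an outlier when fewer than `min_support` of a cluster's skills request it. The stopword list, thresholds, function names, and sample skills are all hypothetical.

```python
from collections import Counter

# Hypothetical stopword list; a real system would use a proper NLP pipeline.
STOPWORDS = {"a", "an", "and", "the", "for", "your", "of", "to", "with", "every"}


def tokenize(text: str) -> set[str]:
    """Lowercase, strip simple punctuation, and drop stopwords."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return {w for w in words if w and w not in STOPWORDS}


def jaccard(x: set[str], y: set[str]) -> float:
    """Jaccard similarity of two word sets (0.0 if both are empty)."""
    union = x | y
    return len(x & y) / len(union) if union else 0.0


def cluster_skills(descriptions: dict[str, str], threshold: float = 0.3) -> list[list[str]]:
    """Greedy clustering: join the first cluster whose representative
    (its first member's words) is similar enough, else start a new one."""
    clusters: list[list[str]] = []
    reps: list[set[str]] = []
    for name, text in descriptions.items():
        toks = tokenize(text)
        for members, rep in zip(clusters, reps):
            if jaccard(toks, rep) >= threshold:
                members.append(name)
                break
        else:  # no sufficiently similar cluster found
            clusters.append([name])
            reps.append(toks)
    return clusters


def find_outliers(clusters: list[list[str]], requested: dict[str, set[str]],
                  min_support: float = 0.5) -> dict[str, list[str]]:
    """Flag data types requested by fewer than min_support of a cluster's skills."""
    outliers: dict[str, list[str]] = {}
    for members in clusters:
        counts = Counter(t for m in members for t in requested[m])
        for m in members:
            rare = sorted(t for t in requested[m] if counts[t] / len(members) < min_support)
            if rare:
                outliers[m] = rare
    return outliers


# Hypothetical skills: three weather skills and one quiz skill.
descriptions = {
    "weather_a": "Get the daily weather forecast for your city",
    "weather_b": "Daily weather forecast and rain alerts for your city",
    "weather_c": "Hear the weather forecast for your city every morning",
    "quiz_a": "Play a trivia quiz game with friends",
}
requested = {
    "weather_a": {"location"},
    "weather_b": {"location"},
    "weather_c": {"location", "contacts"},
    "quiz_a": set(),
}
clusters = cluster_skills(descriptions)
print(clusters)   # [['weather_a', 'weather_b', 'weather_c'], ['quiz_a']]
print(find_outliers(clusters, requested))   # {'weather_c': ['contacts']}
```

Here `weather_c` is flagged because it requests contacts while its functionally similar peers do not. Comparing each skill only against peers with similar functionality is what lets this check catch over-requesting even when the skill's own declarations cover the extra data.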